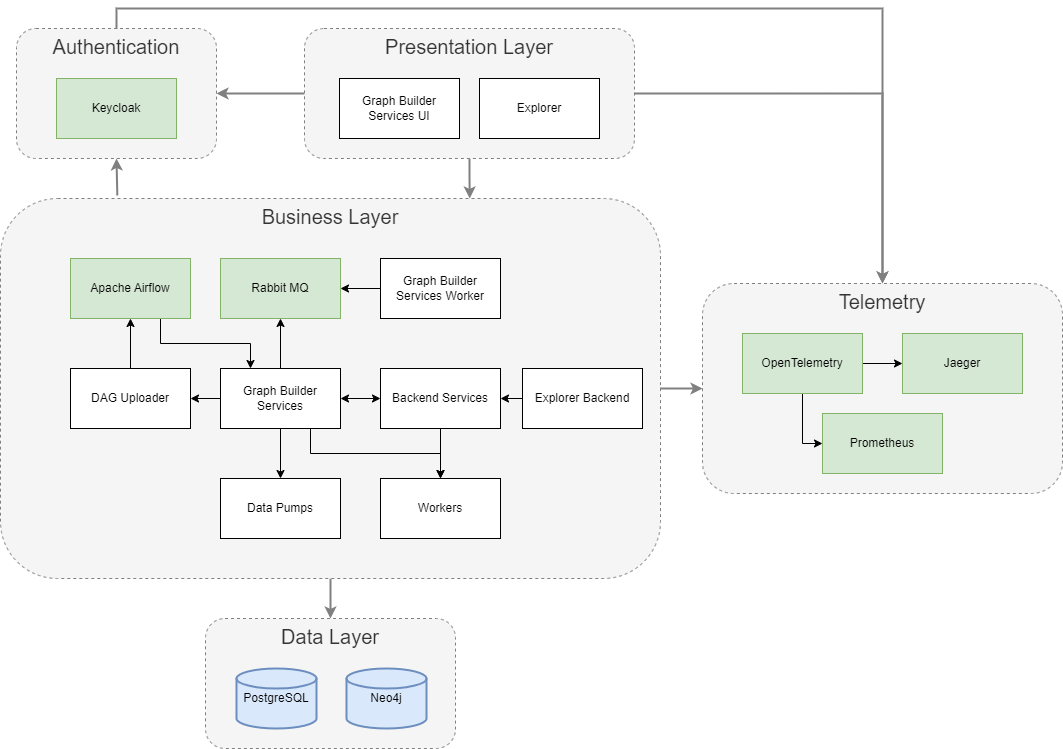

1.2 General Architecture and Building Blocks

All services in the architecture above run in Docker containers. The main reason is to keep them isolated and to build loosely coupled services that can be deployed independently. This simplifies and accelerates the workflow while leaving the freedom to choose custom tools, application stacks, and deployment environments for each application. Each component bundles its own software, libraries, and configuration files. The components communicate with each other through RESTful interfaces, which ensures interoperability between them. The architectural constraints of REST emphasize scalability of component interactions, generality of interfaces, and independent deployment of components. External communication is secured by a gateway based on nginx.

External Software

Data Context Hub uses several third-party software components, which are described in the following sections.

PostgreSQL

PostgreSQL is a powerful, open source object-relational database system. It has a strong reputation for reliability, feature robustness, and performance. All the relational data for Data Context Hub are stored in PostgreSQL.

Neo4j

Neo4j (license) is a graph database management system developed by Neo4j, Inc. It is described as an ACID-compliant transactional database with native graph storage and processing by its developers. Neo4j is implemented in Java and accessible from software written in other languages using the Cypher query language through a transactional HTTP endpoint, or through the binary Bolt protocol. The integrated data of the Data Context Hub are stored in Neo4j in a schema-free form as a knowledge graph, which is accessible through a single access layer.
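As a minimal sketch of the transactional HTTP endpoint mentioned above, the following builds the JSON body that carries a Cypher statement and its parameters. In Neo4j 4.x and later such a body is typically sent with a POST request to `/db/<database>/tx/commit`. The node label `Part`, the property `id`, and the helper name `build_cypher_request` are hypothetical and only serve as illustration; they are not taken from the Data Context Hub schema.

```python
import json
from typing import Optional


def build_cypher_request(statement: str, parameters: Optional[dict] = None) -> str:
    """Serialize one Cypher statement into the JSON body used by
    Neo4j's transactional HTTP endpoint."""
    payload = {
        "statements": [
            {"statement": statement, "parameters": parameters or {}}
        ]
    }
    return json.dumps(payload)


# Hypothetical label and property, for illustration only.
body = build_cypher_request(
    "MATCH (n:Part {id: $id}) RETURN n LIMIT 1",
    {"id": "P-100"},
)
print(body)
```

Passing values as parameters instead of concatenating them into the statement keeps the query text constant and avoids injection problems.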

RabbitMQ

RabbitMQ is an open source message broker that implements the Advanced Message Queuing Protocol (AMQP). It is lightweight and easy to deploy on premises and in the cloud. It supports multiple messaging protocols and can be deployed in distributed and federated configurations to meet high-scale, high-availability requirements. Graph Builder Services (GBS) uses RabbitMQ to send messages that trigger asynchronous tasks in GBS Worker.

Apache Airflow

Apache Airflow is an open-source workflow management platform for data engineering pipelines, created by the community to programmatically author, schedule, and monitor workflows. Workflows are authored as Directed Acyclic Graphs (DAGs) of tasks. The Airflow scheduler executes these tasks on an array of workers while following the specified dependencies. Command-line utilities support complex operations on DAGs, and the user interface makes it easy to visualize pipelines running in production, monitor progress, and troubleshoot issues. GBS uses Apache Airflow to automate and schedule data import processes through these workflows.
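To illustrate what "following the specified dependencies" means for a DAG of tasks, here is a small sketch that uses only the Python standard library module `graphlib` (Python 3.9+), not the Airflow API. The task names are hypothetical and do not correspond to an actual Data Context Hub load plan.

```python
from graphlib import TopologicalSorter

# Hypothetical import pipeline: each task maps to the set of tasks
# that must finish before it may start.
dependencies = {
    "merge": {"extract_orders", "extract_parts"},
    "load_graph": {"merge"},
}


def execution_order(deps):
    """Return one valid order in which the tasks can be executed."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)
```

Both extract tasks have no dependencies, so a scheduler is free to run them in parallel; `merge` can only start once both have finished, and `load_graph` always comes last.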

Keycloak

Keycloak is an open source identity and access management solution aimed at modern applications and services. It makes it easy to secure applications and services with little to no code. See the Keycloak section for more information.

OpenTelemetry

OpenTelemetry is a collection of open source tools, APIs, and SDKs to instrument, generate, collect, and export telemetry data. It uses open-standard semantic conventions to ensure vendor-agnostic data collection, so the data can be sent to different observability backends.

Jaeger

Jaeger is an open source distributed tracing system used for monitoring and troubleshooting microservices-based distributed systems.

Prometheus

Prometheus is an open-source systems monitoring and alerting toolkit. It collects and stores metrics as time series data alongside optional labels.
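The idea of a time series sample with optional labels can be sketched by rendering one sample in the Prometheus text exposition format. The metric name, label names, and helper functions below are hypothetical; they are not metrics exposed by the Data Context Hub.

```python
def escape_label_value(value: str) -> str:
    """Escape backslash, double quote, and newline in a label value."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def format_sample(name: str, labels: dict, value) -> str:
    """Render one sample as a line of the Prometheus text exposition format."""
    if not labels:
        return f"{name} {value}"
    rendered = ",".join(
        f'{key}="{escape_label_value(str(val))}"'
        for key, val in sorted(labels.items())
    )
    return f"{name}{{{rendered}}} {value}"


# Hypothetical metric and labels, for illustration only.
line = format_sample("import_jobs_total", {"status": "ok", "component": "gbs"}, 3)
print(line)  # import_jobs_total{component="gbs",status="ok"} 3
```

Each distinct combination of metric name and label values identifies its own time series.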

Internally Developed Software

The following sections give a quick overview of the remaining Data Context Hub components. Refer to the linked sections for more in-depth information.

Graph Builder Services (GBS)

Graph Builder Services is the core component of the Data Context Hub. It includes all logic necessary to import data using Data Pumps and to process that data into the knowledge graph stored in Neo4j. GBS uses RabbitMQ to send messages that trigger asynchronous tasks in GBS Worker, and Apache Airflow to automate and schedule data import processes through workflows uploaded via DAG Uploader. It also acts as the backend for the GBS UI.

Graph Builder Services UI (GBS UI)

Graph Builder Services UI is the frontend aimed at data engineers. All functionality implemented in GBS can be used through this UI. It allows data engineers to define data structures tailored to the data that will be imported through Data Pumps, create load plans that are executed by Apache Airflow, define access rules for the Graph Security Layer, and much more.

Graph Builder Services Worker (GBS Worker)

The purpose of Graph Builder Services Worker is to consume messages sent to RabbitMQ by GBS and asynchronously execute importing and processing tasks.
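The producer and consumer roles described above can be sketched with an in-process queue. This is only a stand-in: it uses Python's standard `queue` and `threading` modules instead of RabbitMQ and AMQP, and the message fields (`type`, `payload`) and task names are hypothetical, not the actual GBS message format.

```python
import json
import queue
import threading

task_queue = queue.Queue()  # in-process stand-in for a RabbitMQ queue
results = []


def publish(task_type, payload):
    """Producer side (the role GBS plays): enqueue a serialized task message."""
    task_queue.put(json.dumps({"type": task_type, "payload": payload}))


def worker():
    """Consumer side (the role GBS Worker plays): handle messages until a stop marker arrives."""
    while True:
        message = task_queue.get()
        if message is None:  # stop marker, ends the loop
            break
        task = json.loads(message)
        results.append(f"processed {task['type']}")


thread = threading.Thread(target=worker)
thread.start()
publish("import", {"source": "example.csv"})    # hypothetical task
publish("process", {"structure": "example"})    # hypothetical task
task_queue.put(None)
thread.join()
print(results)
```

The producer returns as soon as a message is queued, which is what lets long-running import and processing tasks run asynchronously.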

Data Context Hub Backend Services (EBS)

Data Context Hub Backend Services is a REST API whose endpoints query data from the knowledge graph and can be used to implement custom frontends. Graph-related endpoints apply the rules defined in the Graph Security Layer.

Explorer & Explorer Backend

Explorer is where the knowledge graph can be explored. Using its dedicated Explorer Backend, it is possible to traverse the graph easily and see how the data is connected.

DAG Uploader

DAG Uploader is a Flask-based Python application that uploads the DAG files generated by GBS to Airflow.

Data Pumps

Data Pumps are isolated plug-and-play components that import data from different sources. See Data Pumps for more information.

Workers

Workers are isolated applications that the Data Context Hub can use, either through GBS or through Data Pumps, for specific tasks. They are loosely integrated with GBS and are meant to be deployed and run separately from the Data Context Hub stack, with as much independence as possible.

There are currently two available workers that are ready to use:

- Expression: This worker is needed for Expression Evaluation

- GraphSecurity: This worker is needed for Graph Security Layer

Dependencies

Internally developed components also use third-party packages. Each component provides a way to display the licenses of the packages it uses:

| Component | Licenses |
|---|---|
| GBS UI and Explorer | {{ui-url}}/LICENSES.html |
| GBS and EBS API | {{api-url}}/api/system/licenses |
| Workers | {{worker-url}}/licenses |
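As a small sketch of how the placeholders in the table resolve, the helper below joins a base URL with the license path of a component. The base URL `https://dch.example.com` and the function name `license_url` are assumptions for illustration; substitute the URLs of your own deployment.

```python
# License paths per component type, taken from the table above.
LICENSE_PATHS = {
    "ui": "/LICENSES.html",
    "api": "/api/system/licenses",
    "worker": "/licenses",
}


def license_url(base_url: str, component: str) -> str:
    """Join a base URL with the license path of the given component type."""
    return base_url.rstrip("/") + LICENSE_PATHS[component]


# Hypothetical base URL, for illustration only.
print(license_url("https://dch.example.com/", "api"))
```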